Today we made some satisfying progress. Here is the current status of the different parts of our project.

Grid:

At the moment, we use three different colors on the floor so that the NXT can distinguish between floor, line and intersection in our grid: a black line, yellow intersections and a gray floor. This is not ideal, because both the floor and the intersections are brighter than the line. When the light sensor is positioned on the border between the black line and a yellow intersection, it sees both colors and reports roughly their average, which can be close to the gray value of the floor. In other words, a reading that "looks like" gray may actually come from a line/intersection border, so readings can be ambiguous.

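The solution to this problem is a grid in which the line's brightness lies between those of the intersections and the floor. For example, let the floor be brighter than the line and the intersections darker. When the sensor straddles the line and one of the other colors, the mixed reading then lies between the line value and that color, so it cannot be mistaken for the third color. In this environment, the ambiguous readings described above cannot occur.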
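To illustrate the argument, here is a small Python sketch. It assumes that the light sensor returns a single brightness value, that a reading on a color border is a weighted average of the two colors, and that each reading is classified as the color with the nearest calibrated value. All sensor values below are made up for illustration; real values would have to be measured on the actual floor.

```python
# Hypothetical calibrated light-sensor values (0-100). Real values depend on
# the sensor, the lighting and the materials and must be measured.
OLD = {"line": 30, "floor": 50, "intersection": 65}   # black line, gray floor, yellow intersections
NEW = {"intersection": 30, "line": 45, "floor": 60}   # line brightness between the other two


def make_classifier(levels):
    """Return a function that maps a reading to the color with the nearest value."""
    def classify(reading):
        return min(levels, key=lambda color: abs(levels[color] - reading))
    return classify


def mixed(a, b, fraction):
    """Assumed reading when the sensor sees `fraction` of value a and the rest of value b."""
    return fraction * a + (1 - fraction) * b


def border_readings(levels, a, b, steps=20):
    """Simulated readings while the sensor moves across the border from color b to color a."""
    return [mixed(levels[a], levels[b], i / steps) for i in range(steps + 1)]


# Old layout: half black line, half yellow intersection is read as floor.
classify_old = make_classifier(OLD)
print(classify_old(mixed(OLD["line"], OLD["intersection"], 0.5)))  # floor

# New layout: a border between the line and one color never yields the third color.
classify_new = make_classifier(NEW)
for neighbor in ("intersection", "floor"):
    seen = {classify_new(r) for r in border_readings(NEW, "line", neighbor)}
    print(neighbor, sorted(seen))
# intersection ['intersection', 'line']
# floor ['floor', 'line']
```

With the line in the middle of the brightness range, a border reading can only be classified as one of the two colors actually under the sensor.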

NXT program:

The program running on the NXT is essentially one big switch statement.

It runs in an endless loop and listens on a Bluetooth inbox. Depending on which message our .NET application delivers to this inbox, the NXT turns left, turns right, follows the line to the next intersection, turns around, picks up a ball, releases the ball or leaves the ball. (I might be missing some actions...)
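The structure described above can be sketched as a dispatch loop. The real program runs on the NXT and reads from its Bluetooth mailbox; in this Python sketch a `queue.Queue` stands in for the inbox, a dictionary plays the role of the switch statement, and the message codes (such as `"LEFT"` and `"FOLLOW"`) and the `STOP` sentinel are hypothetical. The actions only record what the robot would do.

```python
import queue

STOP = "STOP"  # hypothetical sentinel so the sketch can end; the real loop runs forever


class Robot:
    """Stand-in for the NXT: each action only records what it would do."""

    def __init__(self):
        self.log = []

    def turn_left(self):
        self.log.append("turn left")

    def turn_right(self):
        self.log.append("turn right")

    def follow_line(self):
        self.log.append("follow line")  # to the next intersection

    def turn_around(self):
        self.log.append("turn around")

    def pick_up_ball(self):
        self.log.append("pick up ball")

    def release_ball(self):
        self.log.append("release ball")

    def leave_ball(self):
        self.log.append("leave ball")


def run(inbox, robot):
    # Hypothetical message codes; the dictionary plays the role of the switch.
    actions = {
        "LEFT": robot.turn_left,
        "RIGHT": robot.turn_right,
        "FOLLOW": robot.follow_line,
        "TURN_AROUND": robot.turn_around,
        "PICK_UP": robot.pick_up_ball,
        "RELEASE": robot.release_ball,
        "LEAVE": robot.leave_ball,
    }
    while True:
        message = inbox.get()  # blocks until the next message arrives
        if message == STOP:
            break
        action = actions.get(message)
        if action is None:
            robot.log.append(f"ignored: {message}")  # assumption: unknown messages are skipped
        else:
            action()


inbox = queue.Queue()
for message in ["FOLLOW", "LEFT", "FOLLOW", "PICK_UP", STOP]:
    inbox.put(message)
robot = Robot()
run(inbox, robot)
print(robot.log)  # ['follow line', 'turn left', 'follow line', 'pick up ball']
```

Because each message maps to exactly one action, adding a new action only means adding one entry to the table.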

This program is almost complete for our final application; only minor corrections and additions are required.

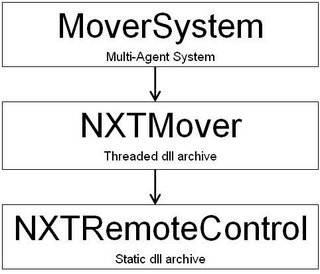

.NET application:

We now have an application that can control one NXT in the grid. It takes the NXT's initial position and direction as input, for example x=1, y=1, direction="North".
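To make the role of the initial direction concrete, here is a minimal Python sketch of how a pose (position plus heading) could be turned into the turn and follow commands the NXT understands, one adjacent intersection at a time. It assumes that "North" means increasing y and "East" increasing x; the command strings and the names `Pose`, `step_commands` and `path_commands` are hypothetical, and the actual .NET application may represent this differently.

```python
from dataclasses import dataclass

# Assumption: North means increasing y and East increasing x.
HEADINGS = ["North", "East", "South", "West"]  # clockwise order
STEP = {"North": (0, 1), "East": (1, 0), "South": (0, -1), "West": (-1, 0)}
# Quarter turns clockwise needed -> hypothetical turn command sent to the NXT.
TURN = {0: [], 1: ["turn right"], 2: ["turn around"], 3: ["turn left"]}


@dataclass(frozen=True)
class Pose:
    x: int
    y: int
    direction: str


def step_commands(pose, target):
    """Commands that move the NXT from `pose` to the adjacent intersection `target`."""
    move = (target[0] - pose.x, target[1] - pose.y)
    for heading, step in STEP.items():
        if step == move:
            break
    else:
        raise ValueError(f"{target} is not adjacent to ({pose.x}, {pose.y})")
    quarter_turns = (HEADINGS.index(heading) - HEADINGS.index(pose.direction)) % 4
    commands = TURN[quarter_turns] + ["follow line"]
    return commands, Pose(target[0], target[1], heading)


def path_commands(pose, path):
    """Commands for a path of adjacent intersections (start position excluded)."""
    commands = []
    for target in path:
        step, pose = step_commands(pose, target)
        commands += step
    return commands, pose


start = Pose(x=1, y=1, direction="North")
commands, end = path_commands(start, [(1, 2), (2, 2), (2, 1)])
print(commands)  # ['follow line', 'turn right', 'follow line', 'turn right', 'follow line']
print(end)       # Pose(x=2, y=1, direction='South')
```

Tracking the heading is what lets the application choose between a left turn, a right turn and turning around before each line-following step.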

We can then enter a new position, and the program calculates a shortest path from the NXT's current position to the new one, using an implementation of the heuristic search algorithm A*. The NXT then (of course) drives to the new position along the calculated path.
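For reference, this is a minimal A* sketch in Python on a grid of intersections. It assumes every move between neighboring intersections costs 1 and uses the Manhattan distance as the heuristic, which never overestimates on a 4-connected grid, so the first time the goal is popped the path is a shortest one. The grid size and the optional set of `blocked` intersections are illustrative parameters, not taken from our application.

```python
import heapq


def manhattan(a, b):
    """Admissible heuristic on a 4-connected grid with unit step cost."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(start, goal, width, height, blocked=frozenset()):
    """Shortest path (including start and goal) on a width x height grid, or None."""
    open_heap = [(manhattan(start, goal), 0, start)]  # entries: (f = g + h, g, node)
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = [node]
            while node in came_from:  # walk back to the start
                node = came_from[node]
                path.append(node)
            return path[::-1]
        if cost > g[node]:
            continue  # stale entry: a cheaper route to this node was found later
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not inside or nxt in blocked:
                continue
            new_cost = cost + 1
            if new_cost < g.get(nxt, float("inf")):
                g[nxt] = new_cost
                came_from[nxt] = node
                heapq.heappush(open_heap, (new_cost + manhattan(nxt, goal), new_cost, nxt))
    return None  # goal unreachable


path = a_star((0, 0), (2, 0), width=3, height=2, blocked={(1, 0)})
print(path)  # [(0, 0), (0, 1), (1, 1), (2, 1), (2, 0)]
```

This cost model ignores the time the NXT spends turning; one possible refinement would be to include the heading in the search state so that paths with fewer turns are preferred.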

Quite nice to finally have something working "on the floor".

Now the real fun begins: we will implement a complex multi-agent system in which several agents cooperate to solve a task in the grid.